As we embrace 2024, the landscape of application development, delivery, and management continues to evolve rapidly. The journey of Cloud Native at Pure Storage has been pivotal in shaping this transformation. Let’s revisit our milestones and gear up for an exciting future.

Journey So Far: A Recap

- 2017-2020: The Advent of DevOps Integration

Focus: Connecting our array with DevOps practices.

Achievement: Streamlining operations and enhancing efficiency. - 2021: Kubernetes and Disaster Recovery

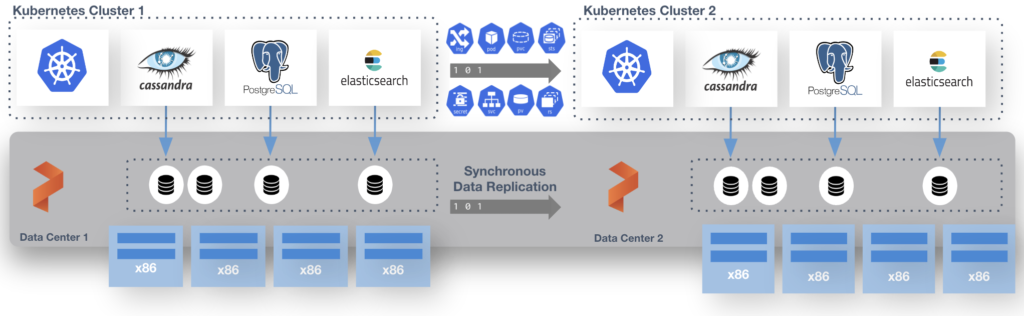

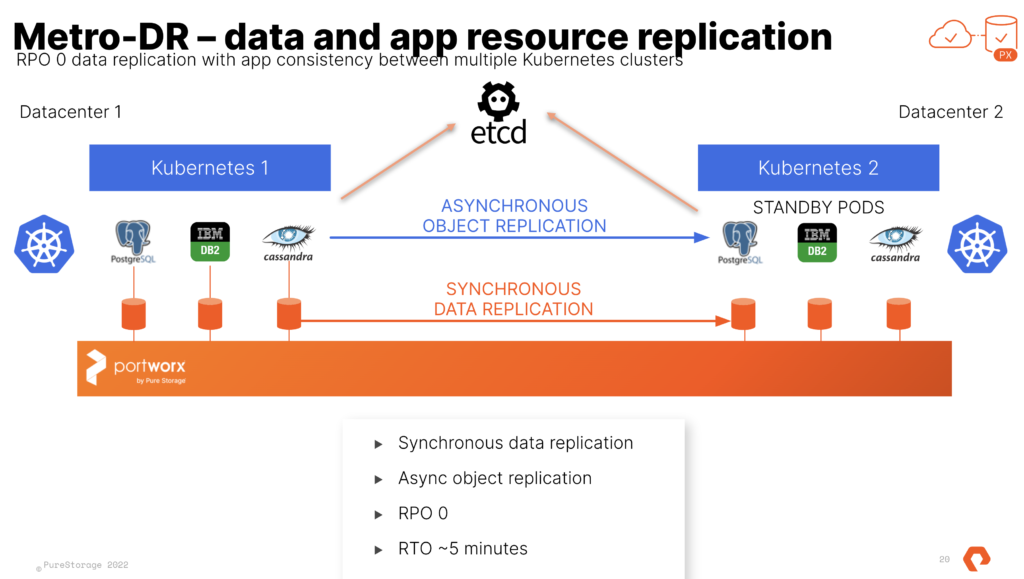

Focus: Providing robust storage and disaster recovery solutions for Kubernetes environments.

Achievement: Enabling more resilient and scalable applications. - 2022: Embracing Stateful Databases in Kubernetes

Focus: Integrating stateful databases with Kubernetes.

Achievement: Offering more dynamic and versatile database solutions. - 2023: Kubernetes as a Landing Zone

Focus: Planning for Kubernetes at scale and exploring bare metal solutions.

Achievement: Facilitating growth and scalability in dynamic environments. Explored paths to lower spend on virtualization. - 2024 and Beyond: The Vision Unfolds

As we step into 2024, the landscape is ripe with opportunities and challenges:

Broadcom’s Acquisition of VMware: This major industry move has prompted a reevaluation of workload management strategies. - Emerging Paradigms: Platform Engineering, DevOps 2.0, Agile methodologies, and Infrastructure as Code are now the norm for competitive businesses.

Rise of Gen AI/LLMs: A transformative shift in data leverage, code delivery, and customer experience.

Kubernetes is the Orchestrator of Choice and remains at the forefront, offering unmatched orchestration capabilities, whether on VMs or beyond. It’s pivotal in automating, observing, and providing agility, independent of physical infrastructure, and is crucial in AI development for business needs.

Three Essential Questions for Leaders

VMware Escape Strategy: What’s our five-year plan post-VMware? Let’s not beat around the bush. Every VMware by Broadcom Customer must consider this question. It is also a valid decision to keep things the same.

Evolving DevOps to Platform Engineering: How do we enhance our practices for faster, better outcomes? Continual learning, improvement and change is a key indicator of the leaders in every industry.

Integrating AI: What are our strategies for embedding AI into our solutions securely and swiftly? Will you rebuild your own ChatGPT? Probably no, but a strategy for building LLM’s into your workflow, customer experience and overall roadmap is table stakes in 2024.

Pure Storage in 2024: Your Strategy Partner In 2024, Pure Storage is not just a solution provider but a partner in aligning your strategies with clear, achievable goals. We are committed to positioning your business for success now and in the future.