Category: Kubernetes

Zero RPO for TKG? How to get Synchronous Disaster Recovery for your Tanzu Cluster

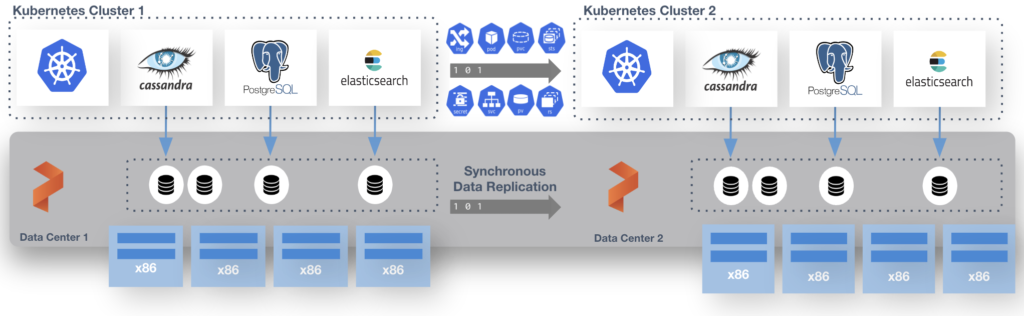

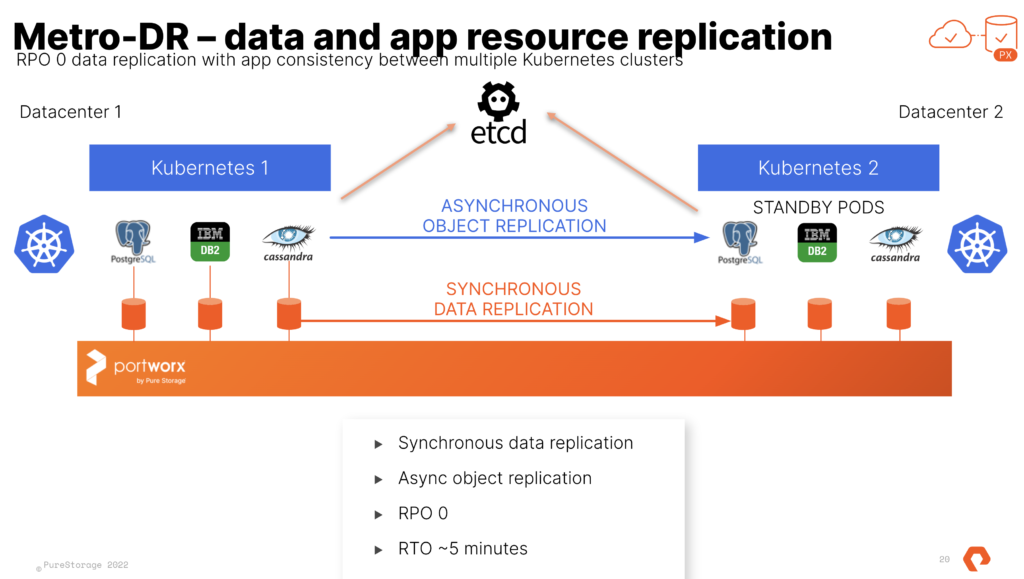

KubeCon and VMware Explore are coming up. One of our most popular sessions at VMware Explore (and VMworld) is the Stretched Cluster for VMware/vVols. Now, you may notice that SRM and other DR solutions do not work with Tanzu, but I want all of you to know that PX-DR Sync, or Metro-DR, is supported for Tanzu. This gives you ZERO RPO when failing over stateful workloads from one cluster to another, for example from one vSphere cluster to another, each running TKG.

More information on how to set up Sync-DR with Tanzu can be found on our docs page:

https://docs.portworx.com/operations/operate-kubernetes/disaster-recovery/px-metro/

Pay close attention to the docs, as Tanzu has some special steps in the setup because of the way Cloud Drives are created and managed with raw CNS volumes.

This is done with a shared etcd between the two distinct TKG clusters. That etcd can run at a third site, where you would also run the “witness node”. I run mine in a standalone admin K8s cluster that hosts all my internal services like etcd, ExternalDNS, Harbor and more. Note that this etcd is used only by Portworx Enterprise; it is not the one used by Kubernetes.

At the end of the process you have two TKG clusters and one Portworx cluster. We use async schedules to copy the Kubernetes objects between clusters, while the data itself is copied synchronously between nodes, limited only by latency (the maximum for Sync-DR is 10 ms). This means the deployment for Postgres or Cassandra in the picture above is copied on a schedule, and on the non-live (target) cluster it is scaled to 0 replicas. The RPO is 0 because the data is copied instantly; the RTO depends on how fast you can spin up the replicas on the target.
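Because Sync-DR is bounded by latency, it is worth measuring the link between sites before you plan on it. The Python below is a minimal sketch, not a Portworx tool: treating the 10 ms limit as a round-trip budget and using TCP connect time as a proxy for that latency are my assumptions, and the host and port in the `__main__` block are hypothetical.

```python
import socket
import time

# Max latency for Sync-DR as stated in this post. Treating it as a
# round-trip budget is an assumption made for this sketch.
SYNC_DR_MAX_MS = 10.0


def measure_connect_ms(host: str, port: int, samples: int = 20, timeout: float = 2.0) -> list[float]:
    """Time TCP connects to host:port; a rough proxy for round-trip latency."""
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            pass  # connect, then close immediately
        results.append((time.perf_counter() - start) * 1000.0)
    return results


def fits_sync_dr(samples_ms: list[float], limit_ms: float = SYNC_DR_MAX_MS) -> bool:
    """Conservative check: every sample, including the worst, must be under the limit."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    return max(samples_ms) < limit_ms


if __name__ == "__main__":
    # Hypothetical node at the other site; use any TCP port that answers there.
    samples = measure_connect_ms("site-b-node.example.internal", 22)
    verdict = "OK for Sync-DR" if fits_sync_dr(samples) else "too slow for Sync-DR"
    print(f"worst {max(samples):.2f} ms -> {verdict}")
```

Using the worst sample rather than an average is deliberate: synchronous replication feels every slow round trip, so an average can hide exactly the spikes that hurt.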

Portworx Enterprise and Metro-DR work with any storage target supported by Tanzu (vSAN, NFS datastores, VMFS datastores, other vVols), but the SPBM and vVols integrations from Pure Storage with the FlashArray are the most used anywhere. The integration and collaboration effort between Pure and VMware Engineering is amazing; Cody Hosterman and his team have done some amazing things. Metro-DR works great with Pure vVols and is the perfect cloud-native complement to your stretched vVols VMs using FlashArray ActiveCluster. If you are interested in using both together, let your Pure Storage team know or send me a message on Twitter and I will track them down for you.

Database as a Service Platform on Tanzu with Portworx Data Services

So I am a week or so late, but the latest update of Portworx Data Services now officially supports Tanzu. I say officially because it kind of worked the whole time; I just can’t declare our support to the world until it passes all the tests from Engineering. So go ahead: the easiest way to get a Database Platform as a Service can now be built on your Tanzu clusters. Go to https://central.portworx.com and contact your local PX team to get access.

The Proof is in the Docs!

https://pds.docs.portworx.com/prerequisites/#_supported_kubernetes_versions

A quick demo of PDS on Tanzu and a couple more of Portworx on Tanzu just for fun.

Using PX-DR and Testing your Disaster Recovery

Remember that draft you made a year ago? Well, look what I found: using PX-DR to test your application on the disaster recovery target. If you are not testing your DR, you should get to it!

Test all Kubeconfig Contexts

One day I woke up and had something like 14 clusters in my kubeconfig. I didn’t remember which ones did what, or whether the clusters even still existed.

So I cooked up this command to run through them all and make sure they actually respond:

```shell
kubectl config get-contexts -o name | xargs -I {} kubectl --context={} get nodes -o wide
```

This works for me. If you have an alternative way, please share it in the comments.
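Here is one alternative, sketched in Python. It runs the same kubectl commands, but adds kubectl's `--request-timeout` flag so a dead cluster fails fast instead of hanging, and prints a one-line verdict per context. The 5s timeout and the injectable `run` parameter (which lets the logic be exercised without a real cluster) are my own choices, not part of the original one-liner.

```python
import subprocess
from typing import Callable

Runner = Callable[[list[str]], subprocess.CompletedProcess]


def default_runner(cmd: list[str]) -> subprocess.CompletedProcess:
    """Run a command and capture its output as text."""
    return subprocess.run(cmd, capture_output=True, text=True)


def list_contexts(run: Runner = default_runner) -> list[str]:
    """Return every context name in the current kubeconfig."""
    out = run(["kubectl", "config", "get-contexts", "-o", "name"])
    return [line.strip() for line in out.stdout.splitlines() if line.strip()]


def check_contexts(run: Runner = default_runner, timeout: str = "5s") -> dict[str, bool]:
    """Map each context to True if `kubectl get nodes` succeeded against it."""
    status = {}
    for ctx in list_contexts(run):
        result = run([
            "kubectl", f"--context={ctx}", f"--request-timeout={timeout}",
            "get", "nodes", "-o", "wide",
        ])
        status[ctx] = result.returncode == 0
    return status


if __name__ == "__main__":
    for ctx, ok in check_contexts().items():
        print(f"{'OK  ' if ok else 'DEAD'} {ctx}")
```

Contexts marked DEAD are good candidates for `kubectl config delete-context`.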

Connecting your Application to Cassandra on PDS

Next up is a way to test Cassandra when deployed with PDS. I saved my Python application to GitHub here: https://github.com/2vcps/py-cassandra

The key here is to deploy Cassandra via PDS, then get the server connection names from PDS. Each step is explained in the repo, so go over there and fork or clone it, or just use my settings. A quick summary, though (it is really this easy):

- Deploy Cassandra to your target in PDS.

- Edit the env-secret.yaml file to match your deployment.

- Apply the secret: `kubectl -n namespace apply -f env-secret.yaml`

- Apply the deployment: `kubectl -n namespace apply -f worker.yaml`

- Check the database in the Cassandra pod: `kubectl -n namespace exec -it cas-pod -- bash`

- Use cqlsh to check the table the app creates.

That is it. Pretty easy, and it creates a lot of records in the database. You could also scale it up to test connections from many sources. I hope this helps you quickly use PDS; if you have any updates or changes to my repo, please submit a PR.
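For a feel of what the connection side of such an app involves, here is a minimal sketch using the DataStax `cassandra-driver` package. It is not the code from the py-cassandra repo, and the environment variable names (`CASSANDRA_HOSTS`, `CASSANDRA_PORT`, `CASSANDRA_USER`, `CASSANDRA_PASSWORD`) are hypothetical; they may not match the keys in env-secret.yaml. The driver is only imported inside `connect()`, so the settings logic works without it installed.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass
class CassandraSettings:
    hosts: list[str]
    port: int
    username: str
    password: str


def load_settings(env: Mapping[str, str] = os.environ) -> CassandraSettings:
    """Read connection settings (hypothetical variable names) from the environment."""
    hosts = [h.strip() for h in env["CASSANDRA_HOSTS"].split(",") if h.strip()]
    return CassandraSettings(
        hosts=hosts,
        port=int(env.get("CASSANDRA_PORT", "9042")),  # 9042 is the default CQL port
        username=env["CASSANDRA_USER"],
        password=env["CASSANDRA_PASSWORD"],
    )


def connect(settings: CassandraSettings):
    """Open a session against the PDS-provided hosts (pip install cassandra-driver)."""
    from cassandra.auth import PlainTextAuthProvider
    from cassandra.cluster import Cluster

    auth = PlainTextAuthProvider(username=settings.username, password=settings.password)
    cluster = Cluster(settings.hosts, port=settings.port, auth_provider=auth)
    return cluster.connect()


if __name__ == "__main__":
    session = connect(load_settings())
    row = session.execute("SELECT release_version FROM system.local").one()
    print("Connected, Cassandra version:", row.release_version)
```

In a pod, the environment would come from the secret applied in the steps above.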

Testing Apache Kafka in Portworx Data Services

With the GA of Portworx Data Services I needed a way to connect some test applications to Apache Kafka, one of the most requested data services in PDS. Deploying Kafka is very easy with PDS, but I wanted to show how easy it is for a data team to connect their application to Kafka in PDS. I found the kafka-python library, so I started working on a few things:

- A Python script to create some load on Kafka.

- Containerize it, so I can make it easy and repeatable.

- Create the Kubernetes deployments so it is quick and easy.

The following GitHub repo is the result of that project:

https://github.com/2vcps/py-kafka

See the repo for the steps on setting up the secret and deployments in K8s to use with your PDS Kafka. Honestly, it should work with any Kafka deployment where you have the connection service, username and password.
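To show what "connection service, username and password" turn into in code, here is a minimal load-generator sketch with kafka-python. It is not the code from the py-kafka repo. The environment variable names are hypothetical, and `SASL_PLAINTEXT` with the `PLAIN` mechanism is an assumption; use whatever security settings your Kafka deployment actually exposes.

```python
import json
import os
import time
import uuid
from typing import Mapping


def producer_config(env: Mapping[str, str]) -> dict:
    """Keyword arguments for kafka.KafkaProducer (env var names are hypothetical)."""
    return {
        "bootstrap_servers": env["KAFKA_BOOTSTRAP"].split(","),
        "security_protocol": "SASL_PLAINTEXT",  # assumption: adjust to your deployment
        "sasl_mechanism": "PLAIN",              # assumption: adjust to your deployment
        "sasl_plain_username": env["KAFKA_USER"],
        "sasl_plain_password": env["KAFKA_PASSWORD"],
        "value_serializer": lambda v: json.dumps(v).encode("utf-8"),
    }


def make_message(seq: int) -> dict:
    """One small JSON-serializable test record."""
    return {"id": str(uuid.uuid4()), "seq": seq, "ts": time.time()}


def run(count: int = 1000) -> None:
    from kafka import KafkaProducer  # pip install kafka-python

    env = os.environ
    producer = KafkaProducer(**producer_config(env))
    topic = env.get("KAFKA_TOPIC", "test-load")
    for seq in range(count):
        producer.send(topic, value=make_message(seq))  # asynchronous, buffered send
    producer.flush()  # block until every buffered record is delivered
    producer.close()


if __name__ == "__main__":
    run()
```

Keeping the config and message builders as plain functions makes them easy to check without a broker; scaling the deployment up is then the simplest way to add load.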

Check out the YouTube demo I did above to see it all in action.

Portworx Data Services GA: An Admin’s View, So easy you won’t believe it…

Portworx Data Services (PDS), the DBaaS platform built on Portworx Enterprise, is One Platform for All Databases. This SaaS platform can work with your platform in the cloud or in your datacenter. Check out this demo of some of the admin tasks available.

The good part: other than adding your DB consumers and your target clusters to run workloads, the rest is configured for you. The power of the platform is that you can change many settings, but the best practices are already in place for you. Now you can have all databases with just one API and one UI.

You no longer need to learn an Operator or custom resources for every different data service your developers, data architects and DBAs ask for. Your data teams also don’t have to learn K8s; they work with databases without ever having to become platform experts. Just one API, one UI.

One Platform. All Databases.

Collection of PDS Links (as of May 18, 2022)

Blog from Umair Mufti on whether you should use DBaaS or DIY

https://blog.purestorage.com/perspectives/dbaas-or-diy-build-versus-buy-comparison/

How to deploy Postgres, by Bhavin Shah, with a video demo

https://portworx.com/blog/accelerate-data-services-deployment-on-kubernetes-using-portworx-data-services/

Deploying Postgres via PDS, step-by-step details by Ron Ekins

https://ronekins.com/2022/05/18/portworx-data-services-pds-and-postgresql/

Portworx Data Services page on Purestorage.com

https://www.purestorage.com/enable/portworx/data-services.html

PDS GA announcement from Pure Storage

https://www.purestorage.com/company/newsroom/press-releases/pure-boosts-developer-productivity-expanding-portworx-portfolio.html

https://www.purestorage.com/company/newsroom/press-releases/pure-boosts-developer-productivity-expanding-portworx-portfolio.html

Migrating existing PostgreSQL into Managed PostgreSQL in PDS

https://portworx.com/blog/migrating-postgresql-to-portworx-data-services/

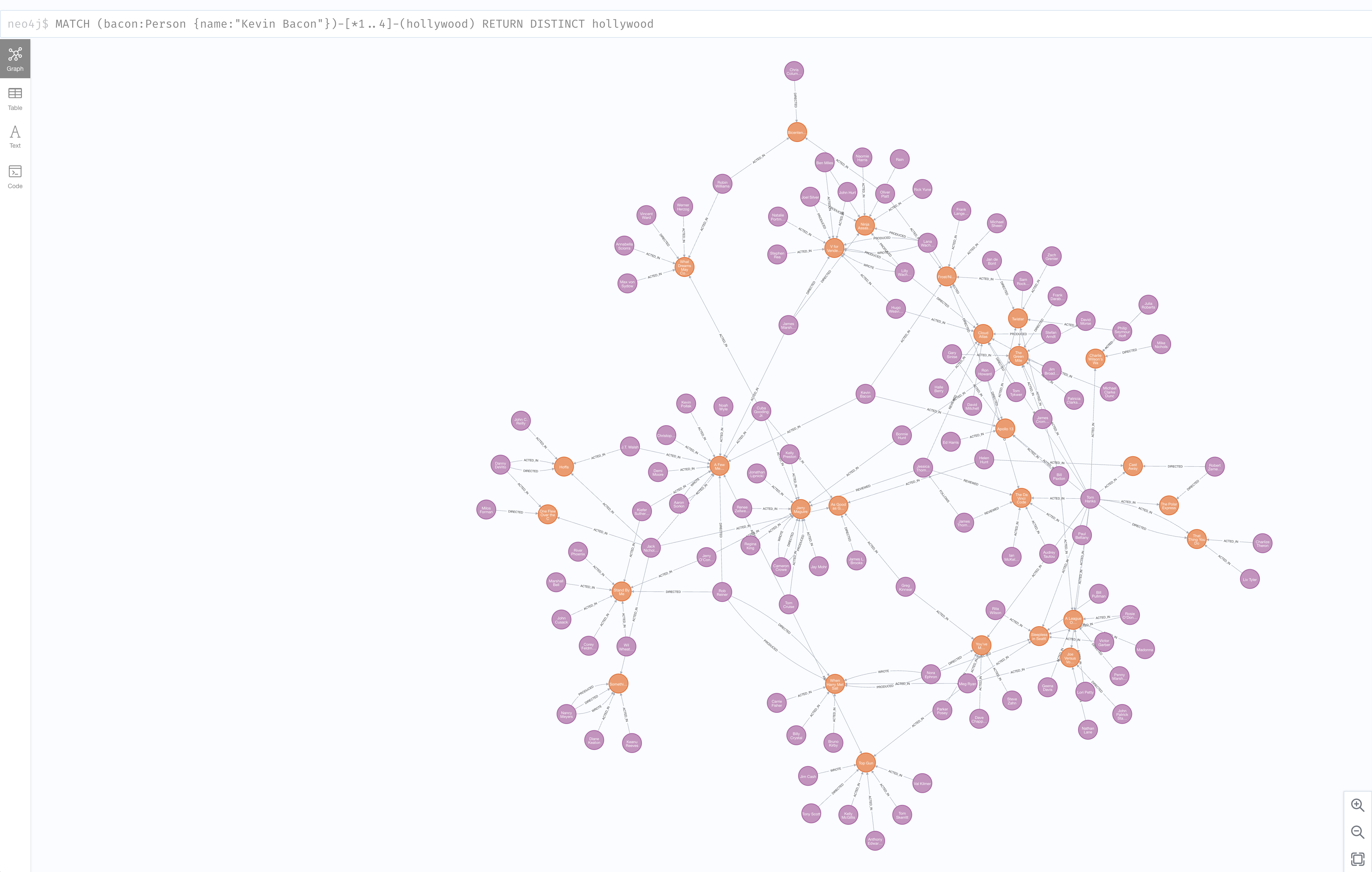

Be Awesome with Neo4j Graph Database In Kubernetes

The graph database Neo4j is popular with data scientists and data architects trying to make connections between nodes and relationships. Neo4j does memory management and other in-memory operations for efficiency and performance, but all of that data eventually needs to persist to a data management platform. This is why I was first asked about Neo4j.

Like most software these days, there are Community and Enterprise Editions, and the robust enterprise functions, such as RBAC and much higher scale, land in the Enterprise Edition.

Neo4j provides a repo of Helm charts and some helpful documentation on running the graph database in Kubernetes. Unfortunately, the instructions stop short of covering many of the K8s flavors supported in the cloud and on premises. Pre-provisioning cloud disks might be fine for a point solution with a single app, but most of the people I talk to who run K8s in production are building Platform as a Service (PaaS) or Database as a Service (DBaaS). Nearly all of them at least want the option of making these solutions hybrid or multi-cloud capable. Additionally, DR and backup are requirements in any environment that values its data and staying in business.

Portworx in 20 seconds

Portworx is a data platform that allows stateful applications such as Neo4j to run in any cloud or on premises hardware, on any K8s distribution. It was built from the very beginning to run as a container for containers. </end commercial>

In this blog post I want to enhance and clarify the documentation for the Neo4j Helm chart so that you can easily run the Community or Enterprise Edition in your K8s deployment.

As with any database, Neo4j benefits greatly from running its persistence on flash. All of my testing was done with a Pure Storage FlashArray.

Step 1

Have K8s, Portworx and Helm already installed. I used Portworx 2.9.1.1 and vanilla K8s 1.22.

Note: the Helm chart gave me trouble until I updated Helm to version 3.8.x.
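Since Helm 3.8.x is what made the chart work for me, a quick version check can save some head-scratching. This is a small sketch that assumes `helm version --short` prints something like `v3.8.1+g5cb9af4`.

```python
import re
import subprocess

MIN_HELM = (3, 8)  # the version that made the Neo4j chart behave for me


def parse_helm_version(text: str) -> tuple[int, int, int]:
    """Extract (major, minor, patch) from `helm version --short` output."""
    match = re.search(r"v(\d+)\.(\d+)\.(\d+)", text)
    if not match:
        raise ValueError(f"unrecognized helm version output: {text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def helm_is_new_enough(text: str, minimum: tuple[int, int] = MIN_HELM) -> bool:
    """Compare major.minor against the minimum."""
    return parse_helm_version(text)[:2] >= minimum


if __name__ == "__main__":
    out = subprocess.run(["helm", "version", "--short"],
                         capture_output=True, text=True, check=True).stdout
    print(out.strip(), "OK" if helm_is_new_enough(out) else "-> upgrade Helm to 3.8.x or later")
```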

Step 2

Add the Neo4j Helm repo:

```shell
helm repo add neo4j https://helm.neo4j.com/neo4j
helm repo update
```

Step 3

Create a neo4j StorageClass (neo4j-storageclass.yaml):

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: neo4j
provisioner: pxd.portworx.com
parameters:
  repl: "2"
  io_profile: db_remote
```

```shell
kubectl apply -f neo4j-storageclass.yaml
```

Verify the StorageClass is available:

```shell
kubectl get sc
```

Step 4

Create your values.yaml for your install.

values-standalone.yaml

```yaml
neo4j:
  resources:
    cpu: "0.5"
    memory: "2Gi"

  # Uncomment to set the initial password
  #password: "my-initial-password"

  # Uncomment to use enterprise edition
  #edition: "enterprise"
  #acceptLicenseAgreement: "yes"

volumes:
  data:
    mode: "dynamic"
    # Only used if mode is set to "dynamic"
    # Dynamic provisioning using the provided storageClass
    dynamic:
      storageClassName: "neo4j"
      accessModes:
        - ReadWriteOnce
      requests:
        storage: 100Gi
```

Cluster values.yaml

node[0-x]values-cluster.yaml

Why do I say 0-x? Well, Neo4j requires a Helm release for each core cluster node (read more detail in the Neo4j Helm docs). For now, each file is the same. Note: Neo4j leans heavily on memory (heap and page cache). 2Gi of RAM is great for the lab, but for real analytics use I would hope to use much more memory.

```yaml
neo4j:
  name: "my-cluster"
  resources:
    cpu: "0.5"
    memory: "2Gi"
  password: "my-password"
  acceptLicenseAgreement: "yes"

volumes:
  data:
    mode: "dynamic"
    # Only used if mode is set to "dynamic"
    # Dynamic provisioning using the provided storageClass
    dynamic:
      storageClassName: "neo4j"
      accessModes:
        - ReadWriteOnce
      requests:
        storage: 100Gi
```

Read Replica values

Create another Helm values file; here it is rr1-values-cluster.yaml:

```yaml
neo4j:
  name: "my-cluster"
  resources:
    cpu: "0.5"
    memory: "2Gi"
  password: "my-password"
  acceptLicenseAgreement: "yes"

volumes:
  data:
    mode: "dynamic"
    # Only used if mode is set to "dynamic"
    # Dynamic provisioning using the provided storageClass
    dynamic:
      storageClassName: "neo4j"
      accessModes:
        - ReadWriteOnce
      requests:
        storage: 100Gi
```

Download all the values.yaml files from GitHub.
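Because the core and read replica files are currently identical, you can also render them from a single template instead of copying by hand. This is a minimal sketch: the template repeats the cluster values from this step (minus comments), the `node1-values-cluster.yaml` / `rr1-values-cluster.yaml` naming follows the files used in this post, and the default of 3 cores is the minimum for a cluster.

```python
from pathlib import Path

# Same settings as the cluster values above; change memory for real workloads.
CLUSTER_VALUES = """\
neo4j:
  name: "my-cluster"
  resources:
    cpu: "0.5"
    memory: "2Gi"
  password: "my-password"
  acceptLicenseAgreement: "yes"

volumes:
  data:
    mode: "dynamic"
    dynamic:
      storageClassName: "neo4j"
      accessModes:
        - ReadWriteOnce
      requests:
        storage: 100Gi
"""


def write_values(out_dir: str = ".", cores: int = 3, replicas: int = 1) -> list[Path]:
    """Create node1..N and rr1..M values files and return the paths written."""
    if cores < 3:
        raise ValueError("a Neo4j cluster needs at least 3 core members")
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)
    names = [f"node{i}-values-cluster.yaml" for i in range(1, cores + 1)]
    names += [f"rr{i}-values-cluster.yaml" for i in range(1, replicas + 1)]
    paths = []
    for name in names:
        path = target / name
        path.write_text(CLUSTER_VALUES)
        paths.append(path)
    return paths


if __name__ == "__main__":
    for p in write_values():
        print("wrote", p)
```

If the members ever need to differ (for example per-node resources), the template can grow placeholders; until then, one source of truth avoids drift between files.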

Step 5

Install Neo4j (standalone)

Standalone

The docs say to run this:

```shell
helm install my-neo4j-release neo4j/neo4j-standalone -f my-neo4j.values-standalone.yaml
```

I do the following instead, and I’ll explain why.

```shell
helm install -n neo4j-1 neo4j-1 neo4j/neo4j-standalone -f ./values-standalone.yaml --create-namespace
```

Cluster Install

For each core node, install a release with its own values file. For example:

```shell
helm install -n neo4j-cluster neo4j-cluster-3 neo4j/neo4j-cluster-core -f ./node1-values-cluster.yaml --create-namespace
```

You need a minimum of 3 core nodes to create a cluster, so you must run the helm install command 3 times for the neo4j-cluster-core Helm chart, giving each run a unique release name and its own values file.
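Typing the same command three times invites mistakes, so here is a small sketch that builds the per-member commands. The release names `neo4j-cluster-1` through `neo4j-cluster-N` and the `nodeN-values-cluster.yaml` file names are illustrative choices following the pattern above; any unique release names in the same namespace work. `dry_run` defaults to True, so it only prints unless you ask it to install.

```python
import shlex
import subprocess

NAMESPACE = "neo4j-cluster"


def core_install_commands(cores: int = 3, namespace: str = NAMESPACE) -> list[list[str]]:
    """One helm install per core member, each with its own release name and values file."""
    return [
        [
            "helm", "install", "-n", namespace, f"neo4j-cluster-{i}",
            "neo4j/neo4j-cluster-core",
            "-f", f"./node{i}-values-cluster.yaml",
            "--create-namespace",
        ]
        for i in range(1, cores + 1)
    ]


def install(cores: int = 3, dry_run: bool = True) -> None:
    """Print each command; run it too when dry_run is False."""
    for cmd in core_install_commands(cores):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failed release


if __name__ == "__main__":
    install()
```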

Successful Cluster Creation

```shell
kubectl -n neo4j-cluster exec neo4j-cluster-0 -- tail /logs/neo4j.log
```

```
2022-03-29 19:14:55.139+0000 INFO Bolt enabled on [0:0:0:0:0:0:0:0%0]:7687.
2022-03-29 19:14:55.141+0000 INFO Bolt (Routing) enabled on [0:0:0:0:0:0:0:0%0]:7688.
2022-03-29 19:15:09.324+0000 INFO Remote interface available at http://localhost:7474/
2022-03-29 19:15:09.337+0000 INFO id: E2E827273BD3E291C8DF4D4162323C77935396BB4FFB14A278EAA08A989EB0D2
2022-03-29 19:15:09.337+0000 INFO name: system
2022-03-29 19:15:09.337+0000 INFO creationDate: 2022-03-29T19:13:44.464Z
2022-03-29 19:15:09.337+0000 INFO Started.
2022-03-29 19:15:35.595+0000 INFO Connected to neo4j-cluster-3-internals.neo4j-cluster.svc.cluster.local/10.233.125.2:7000 [RAFT version:5.0]
2022-03-29 19:15:35.739+0000 INFO Connected to neo4j-cluster-2-internals.neo4j-cluster.svc.cluster.local/10.233.127.2:7000 [RAFT version:5.0]
2022-03-29 19:15:35.876+0000 INFO Connected to neo4j-cluster-3-internals.neo4j-cluster.svc.cluster.local/10.233.125.2:7000 [RAFT version:5.0]
```

Install Read Replica

The cluster must be up and functioning to install the read replica.
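One way to decide that the cluster is "up" before adding the read replica is to check the member log for the signs shown in the successful output above. This is a heuristic sketch based only on those log lines: it looks for the `Started.` line and collects the distinct peers named in `Connected to <peer>/<ip>:7000 [RAFT ...]` lines. In a 3-member cluster, each member should report the other 2.

```python
import re

# Matches lines like:
# ... INFO Connected to neo4j-cluster-2-internals.neo4j-cluster.svc.cluster.local/10.233.127.2:7000 [RAFT version:5.0]
PEER_RE = re.compile(r"INFO Connected to ([^/\s]+)/[\d.]+:7000 \[RAFT")


def raft_peers(log_text: str) -> set[str]:
    """Distinct peer hostnames this member reports RAFT connections to."""
    return set(PEER_RE.findall(log_text))


def cluster_ready(log_text: str, cores: int = 3) -> bool:
    """Heuristic: the member has started and sees every other core member."""
    started = any(line.rstrip().endswith("INFO Started.") for line in log_text.splitlines())
    return started and len(raft_peers(log_text)) >= cores - 1
```

Feed it the output of the same kubectl exec command shown above, swapping `tail` for `cat` so the whole log is checked rather than the last ten lines.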

```shell
helm install -n neo4j-cluster neo4j-cluster-rr1 neo4j/neo4j-cluster-read-replica -f ./rr1-values-cluster.yaml
```

Install the Loadbalancer

To access Neo4j from an external source, install the load balancer service. In our example, run:

```shell
helm install -n neo4j-cluster lb neo4j/neo4j-cluster-loadbalancer --set neo4j.name=my-cluster
```

Why the -n flag?

I pass -n with a namespace plus the --create-namespace flag because it lets me install each Helm release (in this case neo4j-1) into its own namespace, which helps with operations for DR, backup and even lifecycle cleanup down the road. When installing a cluster, all the Helm releases must be in the same namespace.

Start Graph Databasing!

As you can see, there are plenty of tutorials showing how you may use Neo4j.

Some other tips:

https://neo4j.com/docs/operations-manual/current/performance/disks-ram-and-other-tips/

See below for details of the PX cluster:

```
Status: PX is operational
Telemetry: Disabled or Unhealthy
License: Trial (expires in 31 days)
Node ID: ade858a2-30d4-41ba-a2ce-7ee1f9b7c4c0
  IP: 10.21.244.207
  Local Storage Pool: 1 pool
  POOL  IO_PRIORITY  RAID_LEVEL  USABLE   USED    STATUS  ZONE     REGION
  0     HIGH         raid0       297 GiB  12 GiB  Online  default  default
  Local Storage Devices: 2 devices
  Device  Path                                                 Media Type          Size     Last-Scan
  0:1     /dev/mapper/3624a937081f096d1c1642a6900d954aa-part2  STORAGE_MEDIUM_SSD  147 GiB  29 Mar 22 16:21 UTC
  0:2     /dev/mapper/3624a937081f096d1c1642a6900d954ab        STORAGE_MEDIUM_SSD  150 GiB  29 Mar 22 16:21 UTC
  total   -                                                                        297 GiB
  Cache Devices:
   * No cache devices
  Journal Device:
  1       /dev/mapper/3624a937081f096d1c1642a6900d954aa-part1  STORAGE_MEDIUM_SSD
Cluster Summary
  Cluster ID: px-fa-demo1
  Cluster UUID: 76eaa789-384d-4af2-b476-b9b3fd7fdcab
  Scheduler: kubernetes
  Nodes: 8 node(s) with storage (8 online)
  IP             ID                                    SchedulerNodeName  Auth      StorageNode  Used    Capacity  Status  StorageStatus   Version          Kernel             OS
  10.21.244.203  f917d321-3857-4ee1-bfaf-df6419fdac53  pxfa1-3            Disabled  Yes          12 GiB  297 GiB   Online  Up              2.9.1.3-7769924  5.4.0-105-generic  Ubuntu 20.04.4 LTS
  10.21.244.209  c3bea2dc-10cd-4485-8d02-e65a23bf10aa  pxfa1-9            Disabled  Yes          12 GiB  297 GiB   Online  Up              2.9.1.3-7769924  5.4.0-105-generic  Ubuntu 20.04.4 LTS
  10.21.244.204  b27e351a-fed3-40b9-a6d3-7de2e7e88ac3  pxfa1-4            Disabled  Yes          12 GiB  297 GiB   Online  Up              2.9.1.3-7769924  5.4.0-105-generic  Ubuntu 20.04.4 LTS
  10.21.244.207  ade858a2-30d4-41ba-a2ce-7ee1f9b7c4c0  pxfa1-7            Disabled  Yes          12 GiB  297 GiB   Online  Up (This node)  2.9.1.3-7769924  5.4.0-105-generic  Ubuntu 20.04.4 LTS
  10.21.244.205  a413f877-3199-4664-961a-0296faa3589d  pxfa1-5            Disabled  Yes          12 GiB  297 GiB   Online  Up              2.9.1.3-7769924  5.4.0-105-generic  Ubuntu 20.04.4 LTS
  10.21.244.202  37ae23c7-1205-4a03-bbe1-a16a7e58849a  pxfa1-2            Disabled  Yes          12 GiB  297 GiB   Online  Up              2.9.1.3-7769924  5.4.0-105-generic  Ubuntu 20.04.4 LTS
  10.21.244.206  369bfc54-9f75-45ef-9442-b6494c7f0572  pxfa1-6            Disabled  Yes          12 GiB  297 GiB   Online  Up              2.9.1.3-7769924  5.4.0-105-generic  Ubuntu 20.04.4 LTS
  10.21.244.208  00683edb-96fc-4861-815d-a0430f8fc84b  pxfa1-8            Disabled  Yes          12 GiB  297 GiB   Online  Up              2.9.1.3-7769924  5.4.0-105-generic  Ubuntu 20.04.4 LTS
Global Storage Pool
  Total Used     : 96 GiB
  Total Capacity : 2.3 TiB
```

PX Essentials for FA (2.9.0)! How to install it?

This week Portworx Enterprise 2.9.0 was released, and with it comes support for K8s 1.22 and the new Essentials for FlashArray license. You can now have more nodes and capacity, and as many clusters as you like. Previously you only got 5 nodes and a single cluster with PX Essentials. More info in the release notes:

https://docs.portworx.com/reference/release-notes/portworx/#2-9-0

How to install your PX License

- Install the prerequisites for connecting your worker nodes with either iSCSI or Fibre Channel: https://docs.portworx.com/cloud-references/auto-disk-provisioning/pure-flash-array/

- Go to https://central.portworx.com and start generating the spec.

- While creating the spec, you will get the kubectl commands to create the px-pure-secret. This secret takes the pure.json file and puts it where the Portworx installer will use it to provision its block devices from the FlashArray. How do you create the pure.json file? More info here: https://docs.portworx.com/cloud-references/auto-disk-provisioning/pure-flash-array/#deploy-portworx

- Apply the Operator and the StorageCluster. Enjoy your new data platform for Kubernetes.

- When Portworx detects that the drives are coming from a FlashArray, the license is set automatically! Nothing special to do!
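If you prefer to generate pure.json rather than hand-edit it, here is a minimal sketch. The key names (`FlashArrays`, `FlashBlades`, `MgmtEndPoint`, `APIToken`, `NFSEndPoint`) reflect my understanding of the format described in the Portworx FlashArray docs linked above, so verify them against the docs for your version; every endpoint and token is a placeholder.

```python
import json
from pathlib import Path


def build_pure_json(flasharrays, flashblades=()):
    """flasharrays: (mgmt_endpoint, api_token) pairs.
    flashblades: (mgmt_endpoint, api_token, nfs_endpoint) triples (optional)."""
    doc = {"FlashArrays": [{"MgmtEndPoint": m, "APIToken": t} for m, t in flasharrays]}
    if flashblades:
        # FlashBlade entries also need the NFS data endpoint for Direct Attach.
        doc["FlashBlades"] = [
            {"MgmtEndPoint": m, "APIToken": t, "NFSEndPoint": n} for m, t, n in flashblades
        ]
    return doc


def write_pure_json(doc, path="pure.json"):
    """Write the document as indented JSON and return its path."""
    out = Path(path)
    out.write_text(json.dumps(doc, indent=4) + "\n")
    return out


if __name__ == "__main__":
    doc = build_pure_json(
        [("fa-mgmt.example.internal", "<fa-api-token>")],
        [("fb-mgmt.example.internal", "<fb-api-token>", "fb-nfs.example.internal")],
    )
    print("wrote", write_pure_json(doc))
```

Then create px-pure-secret from the file with the kubectl command PX-Central gives you while generating the spec. Including a FlashBlade entry is optional, since the block devices come from the FlashArray.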

Watch the walkthrough

PX + FA = ❤️

First, the ability to use Portworx with FlashArray is not new with 2.9.0; what is new is the automatic Essentials license. Of course, you would still benefit from upgrading Essentials to Enterprise to get DR, Autopilot and Migration. Why would you want to run Portworx on the FlashArray? The FlashArray as a central storage platform gives your stateful workloads all the benefits you are used to with Pure: all-flash performance, non-disruptive upgrades of everything, Evergreen, dedupe and compression with no performance impact, and thin provisioning. Put it this way: the same platform you run your bare-metal and VM-based business-critical apps on, like Oracle and MS SQL, should also run the modernized cloud-native versions of these applications. (They can still be containerized Oracle or SQL, so don’t @ me.) Together with Portworx, it just makes sense: better together.

PX + FB = ❤️

Don’t get confused. While the PX Store layer needs block storage to run, FlashBlade has already been supported with Portworx as a Direct Attach target for a while now. You may even notice in the demo above that my pure.json includes my FlashBlade. So go ahead and use them all.